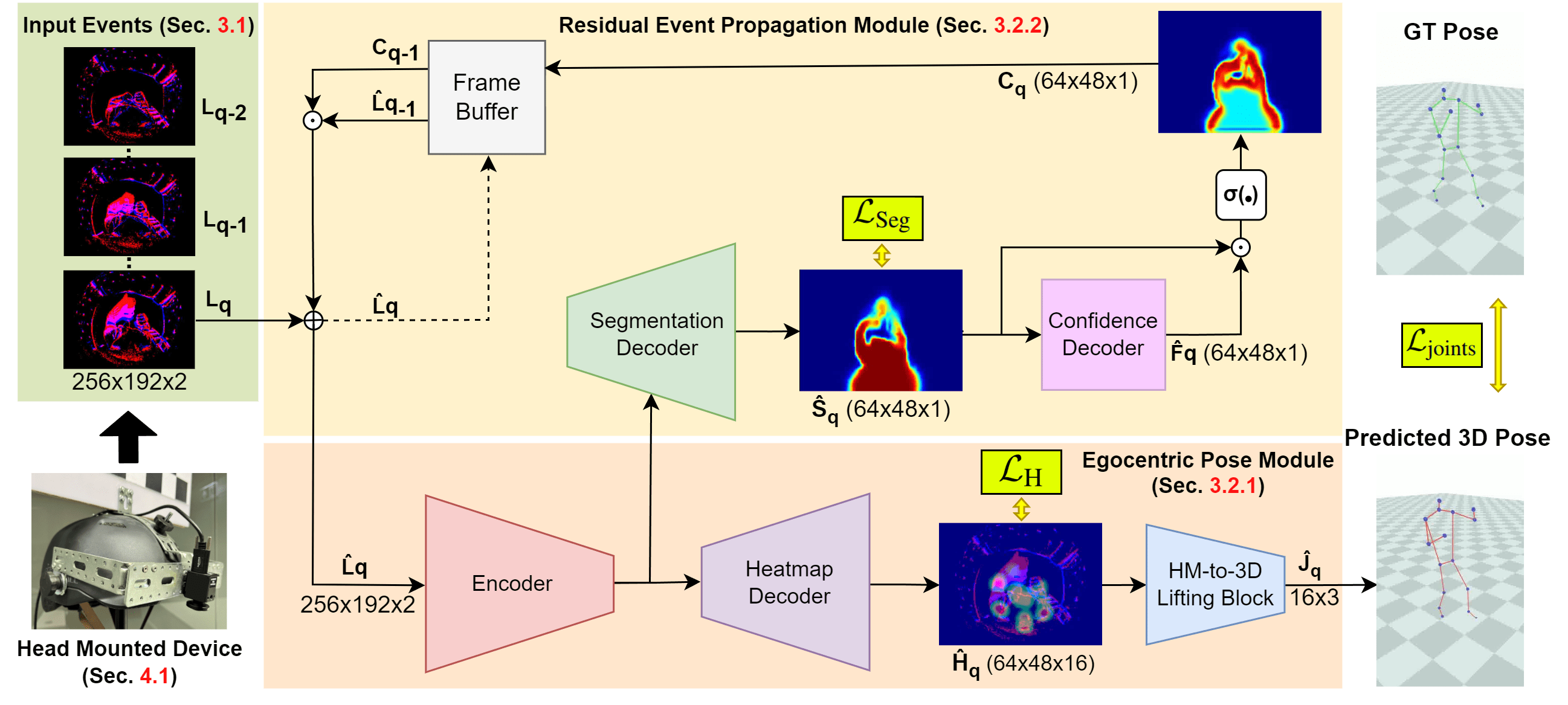

Monocular egocentric 3D human motion capture remains a significant challenge, particularly under low lighting and fast movements, which are common in head-mounted device applications. Existing methods that rely on RGB cameras often fail under these conditions. To address these limitations, we introduce EventEgo3D++, the first approach that uses a monocular event camera with a fisheye lens for 3D human motion capture. Event cameras excel in high-speed scenarios and under varying illumination thanks to their high temporal resolution and high dynamic range, which provide reliable cues for accurate 3D human motion capture. EventEgo3D++ builds on the LNES representation of event streams to enable precise 3D reconstructions. We also developed a mobile head-mounted device (HMD) prototype equipped with an event camera and used it to capture a comprehensive dataset of real event observations from both controlled studio environments and in-the-wild settings; we additionally provide a synthetic dataset. To make the dataset more holistic, we include allocentric RGB streams that show the HMD wearer from different perspectives, along with the corresponding SMPL body models. Our experiments demonstrate that EventEgo3D++ achieves higher 3D accuracy and robustness than existing solutions, even in challenging conditions. Moreover, our method supports real-time 3D pose updates at 140 Hz. This work extends the EventEgo3D approach (CVPR 2024) and further advances the state of the art in egocentric 3D human motion capture.
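To illustrate what an LNES frame is, here is a minimal sketch that assumes the formulation popularised by EventHands (Rudnev et al.): within a temporal window, each pixel of a two-channel image (one channel per polarity) stores the normalised timestamp of the most recent event at that pixel. It is not the EventEgo3D++ implementation. The function name `lnes_frame`, the `(x, y, t, p)` event tuple layout, and the window parameters are hypothetical choices made for this example.

```python
from typing import List, Sequence, Tuple

# Hypothetical event layout: (x, y, t, p) with pixel coordinates, timestamp in
# seconds, and polarity (positive values -> channel 1, otherwise channel 0).
Event = Tuple[int, int, float, int]


def lnes_frame(
    events: Sequence[Event],
    width: int,
    height: int,
    t_start: float,
    window: float,
) -> List[List[List[float]]]:
    """Build a 2-channel LNES-style frame indexed as frame[channel][y][x].

    Each pixel stores (t - t_start) / window for the most recent event of that
    polarity inside [t_start, t_start + window). Pixels without events stay 0.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    frame = [[[0.0] * width for _ in range(height)] for _ in range(2)]
    # Process events in temporal order so later events overwrite earlier ones.
    for x, y, t, p in sorted(events, key=lambda e: e[2]):
        if not (t_start <= t < t_start + window):
            continue  # event lies outside the temporal window
        if not (0 <= x < width and 0 <= y < height):
            continue  # event lies outside the sensor bounds
        channel = 1 if p > 0 else 0
        frame[channel][y][x] = (t - t_start) / window
    return frame


if __name__ == "__main__":
    events = [(1, 0, 0.25, 1), (1, 0, 0.75, 1), (2, 1, 0.5, 0), (0, 0, 1.5, 1)]
    frame = lnes_frame(events, width=3, height=2, t_start=0.0, window=1.0)
    print(frame[1][0][1])  # latest positive event at (1, 0): 0.75
    print(frame[0][1][2])  # negative event at (2, 1): 0.5
```

Because recent events map to values near 1 and older ones to values near 0, a single frame encodes motion direction as a spatial gradient. This is one reason such a representation suits a convolutional pose network. Note that an event exactly at `t_start` is indistinguishable from "no event" in this encoding.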

@article{eventegoplusplus,
  author={Millerdurai, Christen
          and Akada, Hiroyasu
          and Wang, Jian
          and Luvizon, Diogo
          and Pagani, Alain
          and Stricker, Didier
          and Theobalt, Christian
          and Golyanik, Vladislav},
  title={EventEgo3D++: 3D Human Motion Capture from A Head-Mounted Event Camera},
  journal={International Journal of Computer Vision (IJCV)},
  year={2025},
  month={Jun},
  day={11},
  issn={1573-1405},
  doi={10.1007/s11263-025-02489-1},
}